Generative Adversarial Networks (GANs) operate through a competitive dynamic between two neural networks. The generator creates fake samples, while the discriminator evaluates their authenticity. They battle it out: the generator tries to fool, the discriminator tries to catch fakes. Initially, results are garbage. Over many thousands of training iterations, the generator improves through feedback loops and backpropagation. Eventually, it produces hyper-realistic outputs like those non-existent faces you’ve probably seen. This AI arms race powers some of today’s most impressive synthetic content.

While artificial intelligence keeps making headlines, few people actually understand the inner workings of systems like GANs. These Generative Adversarial Networks might sound complicated, but their core concept is surprisingly straightforward. Two neural networks locked in a competitive battle. One creates, the other judges. Simple, right?

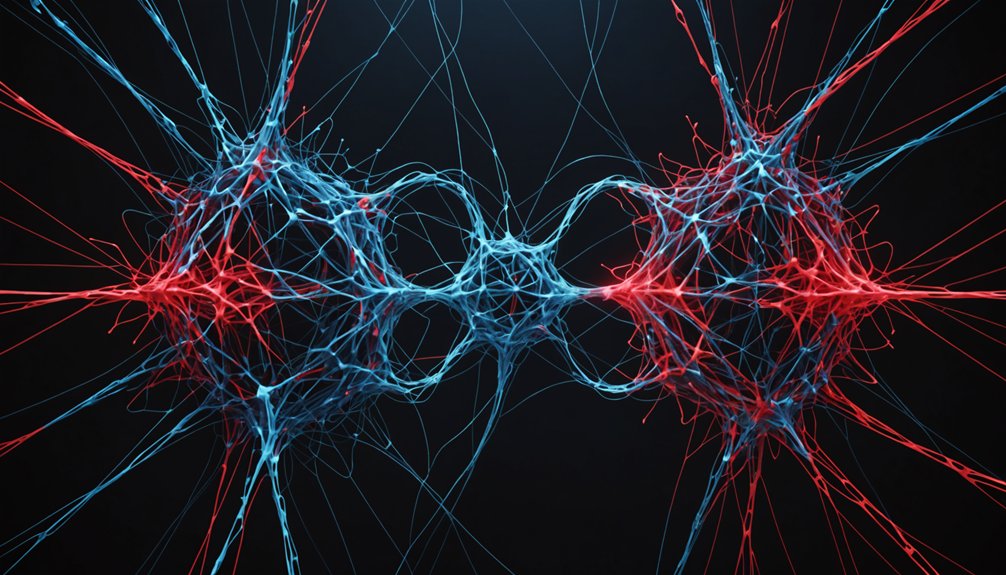

GANs consist of two main components: a generator and a discriminator. Think of the generator as an art forger, constantly trying to create fake paintings. The discriminator? That’s the art expert attempting to spot the forgeries. They’re playing an endless game of cat and mouse. The generator gets better at creating fakes, the discriminator improves at spotting them. Round and round they go. Like modern fraud detection systems, the discriminator continuously learns from new patterns to identify suspicious or anomalous data.

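The magic happens in the training process. Both networks learn by gradient descent, with the gradients computed through backpropagation. The discriminator is trained to label real samples as real and generated ones as fake. The generator is trained to make the discriminator label its fakes as real. Because the generator’s gradients flow back through the discriminator, every verdict tells it which way to adjust its outputs: a caught fake points at what needs fixing, and a successful one reinforces what worked. It’s brutal, efficient, and surprisingly effective. Loosely like reinforcement learning, this process improves behavior through repeated trial and error, although the feedback here is a differentiable loss rather than a reward signal.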
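The feedback both networks receive can be made concrete with the standard GAN loss functions. This is a minimal sketch assuming the common binary cross-entropy form and the "non-saturating" generator loss that the original GAN paper recommends in place of minimizing log(1 - D(G(z))). The function names are illustrative, and a real implementation would average these values over a mini-batch and let a framework such as PyTorch or TensorFlow differentiate them.

```python
import math

# d_real and d_fake are the discriminator's outputs D(x) and D(G(z)):
# probabilities in (0, 1) that a sample is real.

def discriminator_loss(d_real: float, d_fake: float) -> float:
    """Binary cross-entropy: low when D(x) is near 1 and D(G(z)) is near 0."""
    return -math.log(d_real) - math.log(1.0 - d_fake)

def generator_loss(d_fake: float) -> float:
    """Non-saturating generator loss: low when D(G(z)) is near 1."""
    return -math.log(d_fake)

# A confident, correct discriminator has a low loss...
print(round(discriminator_loss(0.9, 0.1), 3))  # 0.211
# ...and the generator pays a high price for a fake that gets caught.
print(round(generator_loss(0.1), 3))           # 2.303
```

Note that the two objectives pull D(G(z)) in opposite directions, which is the adversarial game in a single number.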

In this algorithmic battle of wits, failure becomes the greatest teacher for creator and critic alike.

These networks typically train unsupervised: no human labels are needed, because each discriminator mini-batch mixes real samples (labeled real) with generated ones (labeled fake). The process isn’t perfect. Training can be unstable, and problems like mode collapse (the generator producing only a few kinds of output) or vanishing gradients make GANs notoriously difficult to work with. Decent results can take hundreds of thousands of training iterations. No wonder AI researchers drink so much coffee.
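The "labels for free" idea behind that unsupervised setup can be sketched in a few lines. Here `real_sampler` and `generator` are hypothetical stand-ins that return single floats; in practice both would produce tensors such as images or audio frames, and the generator would be a trained network rather than a fixed scaling.

```python
import random

def real_sampler(rng: random.Random) -> float:
    """Hypothetical 'real data': draws from a Gaussian centered at 4."""
    return rng.gauss(4.0, 1.0)

def generator(z: float) -> float:
    """Hypothetical untrained generator: its outputs sit near 0."""
    return 0.5 * z

def discriminator_batch(batch_size: int, rng: random.Random):
    """Build one shuffled mini-batch of (sample, label) pairs:
    label 1 for real samples, label 0 for generated ones."""
    n_real = batch_size // 2
    real = [(real_sampler(rng), 1) for _ in range(n_real)]
    fake = [(generator(rng.gauss(0.0, 1.0)), 0)
            for _ in range(batch_size - n_real)]
    batch = real + fake
    rng.shuffle(batch)
    return batch

batch = discriminator_batch(8, random.Random(0))
print(sum(label for _, label in batch))  # 4 real samples in the batch
```

The even real/fake split is an assumption made for simplicity; implementations vary in how they compose each batch.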

Initially, the generator produces garbage. Literally. Random noise that doesn’t look like anything. The discriminator easily spots these pathetic attempts. But over time, the quality improves. The fake faces start looking more human. The artificial landscapes become more realistic. In image GANs, the generator is typically a convolutional network that transforms random inputs into increasingly convincing outputs. In theory, this competitive dynamic drives both networks toward a Nash equilibrium: a point where neither network can improve by changing its own strategy alone.
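How a convolutional generator turns a noise vector into an image can be traced by following the spatial size through its layers. This sketch assumes a DCGAN-style stack of transposed convolutions with kernel 4, stride 2 and padding 1 (common choices, used here for illustration) and uses the output-size formula PyTorch documents for `nn.ConvTranspose2d` with dilation 1.

```python
def conv_transpose_out(size: int, kernel: int, stride: int, padding: int,
                       output_padding: int = 0) -> int:
    """Spatial output size of a 2-D transposed convolution (dilation 1)."""
    return (size - 1) * stride - 2 * padding + kernel + output_padding

# The noise vector is treated as a 1x1 "image"; the first layer expands it
# to 4x4, and each following layer doubles the height and width.
layers = [(4, 1, 0)] + [(4, 2, 1)] * 4  # (kernel, stride, padding)
size = 1
sizes = []
for kernel, stride, padding in layers:
    size = conv_transpose_out(size, kernel, stride, padding)
    sizes.append(size)
print(sizes)  # [4, 8, 16, 32, 64]
```

Five layers take random noise to a 64x64 output, which is the resolution the original DCGAN setup worked at.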

Eventually, if everything goes right, they reach that equilibrium: the generator creates samples so convincing that the discriminator can’t tell real from fake and does no better than a coin flip, assigning a probability of 1/2 to every input.
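In the original GAN analysis, this equilibrium has a precise form. For a fixed generator, the best possible discriminator is D*(x) = p_data(x) / (p_data(x) + p_g(x)), and when the generator matches the data exactly, D* is 1/2 everywhere and the game's value drops to -log 4. The sketch below checks this on made-up discrete distributions over three outcomes; real GANs work with continuous, high-dimensional data where these densities are never computed explicitly.

```python
import math

def optimal_discriminator(p_data, p_g):
    """D*(x) = p_data(x) / (p_data(x) + p_g(x)) for each outcome x.
    Outcomes neither distribution can produce get 0.5 (any value works)."""
    return [0.5 if pd + pg == 0 else pd / (pd + pg)
            for pd, pg in zip(p_data, p_g)]

def game_value(p_data, p_g):
    """V(D*, G) = E_{x~p_data}[log D*(x)] + E_{x~p_g}[log(1 - D*(x))]."""
    d = optimal_discriminator(p_data, p_g)
    real_term = sum(pd * math.log(dx) for pd, dx in zip(p_data, d) if pd > 0)
    fake_term = sum(pg * math.log(1.0 - dx) for pg, dx in zip(p_g, d) if pg > 0)
    return real_term + fake_term

p_data = [0.5, 0.3, 0.2]  # hypothetical data distribution
print(optimal_discriminator(p_data, p_data))  # [0.5, 0.5, 0.5]
print(round(game_value(p_data, p_data), 4))   # -1.3863, i.e. -log 4
```

Any generator distribution that differs from `p_data`, such as `[0.2, 0.3, 0.5]`, gives a value above -log 4, which is why the theory says the generator's best move is to match the data exactly.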

And that’s how you get those creepy, hyper-realistic faces of people who don’t exist. Cool technology. Slightly terrifying implications.

Frequently Asked Questions

What Ethical Concerns Arise From GANs Generating Realistic Fake Content?

GANs create major ethical headaches.

They’re one of the technologies behind deepfakes, which spread misinformation and can potentially disrupt democratic processes. No joke.

Privacy? Out the window. By some estimates, about 95% of deepfake videos use people’s images without consent, especially for pornography. Devastating for victims.

Legal systems can’t keep up with this AI evolution. Public trust gets shattered when real and fake become indistinguishable.

Detection methods exist, but it’s a constant cat-and-mouse game. The technology is moving faster than our ethical frameworks.

How Do GANs Compare to Other Generative Models Like VAEs?

GANs and VAEs take different paths to the same destination.

GANs excel at creating realistic images through adversarial training, while VAEs are probabilistic models trained on a reconstruction loss plus a regularization term (a KL divergence) that keeps their latent space well-behaved.
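The VAE side of this comparison can be sketched for a single latent dimension. This assumes a Gaussian encoder that outputs a mean and log-variance, a standard-normal prior, and squared error as the reconstruction term (a common simplification); the function names and numbers are illustrative. The KL term is the piece that gives VAEs an explicitly shaped latent space.

```python
import math
import random

def reparameterize(mu: float, log_var: float, rng: random.Random) -> float:
    """Reparameterization trick: z = mu + sigma * eps with eps ~ N(0, 1),
    so the sample stays differentiable with respect to mu and log_var."""
    eps = rng.gauss(0.0, 1.0)
    return mu + math.exp(0.5 * log_var) * eps

def kl_to_standard_normal(mu: float, log_var: float) -> float:
    """Closed-form KL(N(mu, sigma^2) || N(0, 1)) for one latent dimension."""
    return 0.5 * (mu ** 2 + math.exp(log_var) - 1.0 - log_var)

def vae_loss(x: float, x_recon: float, mu: float, log_var: float) -> float:
    """Reconstruction error (squared error, assumed) plus the KL regularizer."""
    return (x - x_recon) ** 2 + kl_to_standard_normal(mu, log_var)

mu, log_var = 0.3, -1.0  # hypothetical encoder outputs for one input
z = reparameterize(mu, log_var, random.Random(0))  # would be fed to the decoder
print(round(kl_to_standard_normal(mu, log_var), 4))  # 0.2289
```

A GAN has no counterpart to `kl_to_standard_normal`: its noise is simply sampled and fed forward, and nothing pulls real data back into the latent space.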

The difference? GANs produce sharper images but suffer from instability and mode collapse.

VAEs? More stable but often blurry output.

GANs sample from a latent space but don’t explicitly model it or learn an encoder that maps data back into it, while VAEs do.

For sharp image generation, GANs have traditionally had the edge. For understanding data structure, VAEs take the crown.

Simple as that.

Can GANs Be Applied to Non-Image Data Effectively?

Yes, GANs can tackle non-image data effectively. They’ve shown promise with audio, generating realistic music and speech patterns.

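Text applications? Getting there, slowly: text is built from discrete tokens, and picking a discrete token blocks the gradient signal the generator needs from the discriminator. Time-series data? Definitely workable. The challenges are real, though: representing complex structures and evaluating quality isn’t straightforward.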
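Why discrete tokens cause trouble can be shown numerically. The sketch compares a hard argmax token choice with a softmax over the same scores, nudging one score and measuring how the probability of the first token responds. The three-token vocabulary and logit values are made up for illustration; this is not a text GAN, only the gradient problem underneath one.

```python
import math

def softmax(logits):
    """Smooth, differentiable token probabilities."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def one_hot_argmax(logits):
    """Hard token choice: 1.0 for the highest score, 0.0 elsewhere."""
    best = max(range(len(logits)), key=lambda i: logits[i])
    return [1.0 if i == best else 0.0 for i in range(len(logits))]

def finite_diff(fn, logits, index, h=1e-4):
    """Numerical derivative of fn(logits)[0] with respect to logits[index]."""
    bumped = list(logits)
    bumped[index] += h
    return (fn(bumped)[0] - fn(logits)[0]) / h

logits = [2.0, 1.0, 0.5]  # hypothetical scores for a 3-token vocabulary
print(finite_diff(one_hot_argmax, logits, 1))  # 0.0: no signal to learn from
print(finite_diff(softmax, logits, 1) < 0)     # True: a smooth signal exists
```

Image pixels are continuous, so this problem never arises there; it is one reason text lags behind images for GANs.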

GANs struggle with keeping the generator and discriminator in balance and with training instability, especially outside image domains. Still, they’re pushing boundaries. Research continues, and the potential is massive. Not perfect yet, but getting better.

What Computational Resources Are Required to Train Advanced GANs?

Training advanced GANs isn’t for the faint of hardware. They demand high-performance GPUs like NVIDIA Tesla V100 or A100, plus substantial RAM (16GB minimum).
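A back-of-the-envelope estimate shows where some of that memory goes. This sketch assumes 32-bit floats and the Adam optimizer, which keeps two extra buffers per parameter, so each parameter costs four copies (weight, gradient, two moments). The parameter counts are hypothetical, and real usage is higher because activations and framework overhead are left out.

```python
def training_state_gb(num_params: int, bytes_per_value: int = 4,
                      copies: int = 4) -> float:
    """Memory for weights, gradients and Adam's two moment buffers, in GB.
    Activations, which often dominate for large images, are NOT included."""
    return num_params * bytes_per_value * copies / 1e9

# A GAN trains two networks, each with its own optimizer state.
generator_gb = training_state_gb(50_000_000)      # hypothetical 50M params
discriminator_gb = training_state_gb(50_000_000)  # hypothetical 50M params
total = generator_gb + discriminator_gb
print(round(total, 2))  # 1.6
```

Parameters alone rarely fill a GPU; it is the activations for large batches of high-resolution images that push memory requirements up.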

The software stack typically includes TensorFlow or PyTorch. Cloud services offer alternatives for those without in-house muscle.

Training complexity skyrockets with larger datasets and sophisticated architectures. Optimization techniques such as mixed-precision training help, but let’s be real: GANs are resource gluttons. No way around it.

How Are GANs Being Implemented in Creative Industries?

GANs are transforming creative industries big time.

They’re integrated into production workflows, not replacing humans but enhancing their work. Artists use them for generating unique artwork. Game developers? Creating realistic environments. Fashion designers predict trends with them.

They’re slashing project turnaround times through real-time collaboration. Plus, they’re great for data augmentation—crucial for training other AI models.
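Data augmentation with a GAN usually means topping up a real dataset with generated samples. This is a minimal sketch under stated assumptions: `fake_generator` is a hypothetical stand-in for a trained generator, and the synthetic-to-real ratio is a free parameter you would tune, not a recommended value.

```python
import random

def augment(real, generate, synthetic_ratio: float, rng: random.Random):
    """Return the real samples plus synthetic ones from `generate`;
    synthetic_ratio is the number of synthetic samples per real sample."""
    n_synthetic = int(len(real) * synthetic_ratio)
    synthetic = [generate(rng) for _ in range(n_synthetic)]
    mixed = list(real) + synthetic
    rng.shuffle(mixed)
    return mixed

def fake_generator(rng: random.Random) -> float:
    """Hypothetical stand-in for a trained GAN generator."""
    return rng.gauss(0.0, 1.0)

real_data = [0.1, -0.4, 1.2, 0.7]
mixed = augment(real_data, fake_generator, 0.5, random.Random(42))
print(len(mixed))  # 6
```

Keeping every real sample and only adding synthetic ones means the downstream model never loses ground-truth data, whatever the generator's quality.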

The content they produce? Often indistinguishable from human-made stuff. Pretty wild.