Tensors are multi-dimensional data containers that generalize ordinary numbers, vectors, and matrices. Think of them as organizational tools that hold information along several axes at once. They’re essential in physics for describing phenomena like relativity and electromagnetic fields. In machine learning, they carry the data and parameters that neural networks compute with. Despite their intimidating reputation, tensors simply represent relationships between mathematical objects. Their power lies in staying consistent across different coordinate systems. The deeper mathematics reveals their true elegance.

Tensors. They’re everywhere in modern science, yet most people run screaming at their mention. Simply put, tensors are mathematical objects that describe relationships between other mathematical objects, typically in vector spaces. Think of them as data containers—flexible ones that can hold numbers in multiple dimensions. Not just rows and columns like matrices. More.

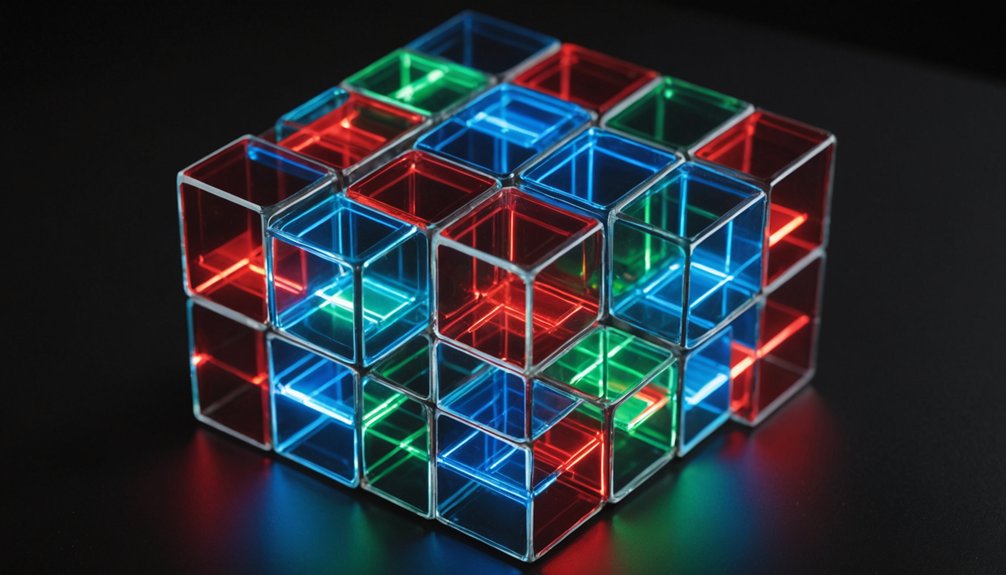

Tensors come in different orders. Scalars are zero-order tensors—just single numbers. Vectors are first-order, like arrows pointing in space. Then it gets interesting. Second-order tensors? Matrix-like structures. Beyond that? Multi-dimensional grids that would make your head spin.
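To make the ladder of orders concrete, here is a minimal sketch using NumPy arrays as stand-ins for tensors. It assumes the array-style view of a tensor, where the number of axes (`ndim`) plays the role of the order; the variable names are purely illustrative.

```python
import numpy as np

# In NumPy, ndim (the number of axes) plays the role of tensor order.
scalar = np.array(5.0)                 # order 0: a single number
vector = np.array([1.0, 2.0, 3.0])     # order 1: one index
matrix = np.eye(3)                     # order 2: two indices
cube = np.zeros((3, 3, 3))             # order 3: three indices

for name, t in [("scalar", scalar), ("vector", vector),
                ("matrix", matrix), ("cube", cube)]:
    print(f"{name}: order {t.ndim}, shape {t.shape}")
```

Each step up adds one more index you need to pick out a single number.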

Tensors escalate elegantly—from humble scalars to vectors to matrices and beyond into mind-bending dimensions most can’t visualize.

What makes tensors special isn’t their complexity. It’s their invariance. Change your coordinate system, flip it around, do whatever you want: the tensor itself doesn’t care. Its components change, but they change by a fixed rule, so the object they describe and every scalar built from it stay the same. This is why physicists love them. Laws of nature shouldn’t depend on how you look at them, right?
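A small numerical check can make invariance less abstract. The sketch below assumes a plain rotation between two orthonormal bases in the plane, which sidesteps the covariant/contravariant distinction; the angle and the component values are made up for illustration.

```python
import numpy as np

theta = np.pi / 6  # illustrative rotation angle
# Rotation matrix relating the old and new orthonormal bases
R = np.array([[np.cos(theta), -np.sin(theta)],
              [np.sin(theta),  np.cos(theta)]])

v = np.array([3.0, 4.0])               # vector components in the old basis
T = np.array([[2.0, 1.0],
              [1.0, 3.0]])             # order-2 tensor components in the old basis

v_new = R @ v                          # first-order rule: one factor of R
T_new = R @ T @ R.T                    # second-order rule: one factor of R per index

# The components differ between the two coordinate systems...
components_changed = not np.allclose(v, v_new)
# ...but coordinate-free quantities agree.
length_old, length_new = np.linalg.norm(v), np.linalg.norm(v_new)
quad_old, quad_new = v @ T @ v, v_new @ T_new @ v_new

print(components_changed, length_old, length_new, quad_old, quad_new)
```

The numbers in `v_new` and `T_new` look nothing like the originals, yet the length of the vector and the scalar `v @ T @ v` come out the same in both systems.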

In physics, tensors are workhorses. Einstein’s theory of relativity? Tensor-based. The stress in your car’s frame during a crash? Described by tensors. Electromagnetic fields? Yep, tensors again.

But tensors aren’t just for physics nerds. They’ve invaded machine learning and data science too. Those fancy neural networks processing your Instagram photos? They crunch tensors all day long, although in that world a tensor usually just means a multi-dimensional array of numbers. Computer graphics, engineering, materials science: tensors are there, quietly making calculations possible. Modern transformer models, for example, store token sequences, attention weights, and learned parameters as tensors.

People get confused because second-order tensors look like matrices on paper. But that’s just the representation in one particular basis. The essence is different. It’s like mistaking the actor for the character. Rookie mistake.

Tensor operations follow specific rules. You can add tensors of the same order, scale them, contract them over a pair of indices (which reduces the order by two), or take their tensor product (which adds the orders together). Index notation makes working with them less painful. Barely. The transformation rules spell out exactly how components change when switching between coordinate systems.
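Here is a rough sketch of those operations using NumPy’s `einsum`, whose subscript strings mirror index notation fairly closely. The arrays are arbitrary examples, and NumPy arrays are only a stand-in for tensor components in one fixed basis.

```python
import numpy as np

a = np.array([1.0, 2.0, 3.0])          # order 1
b = np.array([4.0, 5.0, 6.0])          # order 1
M = np.arange(9.0).reshape(3, 3)       # order 2

total = a + b                          # addition: same order, same shape
outer = np.einsum("i,j->ij", a, b)     # tensor product: order 1 + 1 = 2
big = np.einsum("ij,k->ijk", M, a)     # tensor product: order 2 + 1 = 3
trace = np.einsum("ii->", M)           # contraction over a repeated index: order 2 -> 0
dot = np.einsum("i,i->", a, b)         # product then contraction: order 2 -> 0

print(outer.ndim, big.ndim, trace, dot)
```

A repeated index letter means "sum over this index", which is exactly what a contraction does.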

Despite their importance, tensors remain widely misunderstood. They’re abstract. They’re multi-dimensional. They’re confusing. The concept was popularized by mathematicians Ricci-Curbastro and Levi-Civita in 1900, in a paper on absolute differential calculus that reshaped differential geometry. More than a century later, tensors are still incredibly useful tools for describing our complex, multi-dimensional world. That’s worth something.

Frequently Asked Questions

What Are Real-World Applications of Tensors Outside Physics?

Tensors aren’t just physics nerds’ playthings. They’re everywhere. In computer science, they power machine learning and neural networks.

Engineers use them for stress analysis in buildings—preventing collapses, basically. Data scientists love tensors for finding patterns in complex datasets.

Even economists use them to model market relationships. Tensor decomposition approximates a huge tensor with a handful of much smaller factors, which cuts storage and computation in massive systems.
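To see why decomposition saves so much, consider the simplest possible case: a third-order tensor that is exactly the tensor product of three vectors. This is only a sketch of the storage argument, with random made-up data; real datasets are at best approximately low-rank, and actually computing a decomposition takes a dedicated algorithm that is not shown here.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 50
# Three factor vectors (hypothetical data) define a rank-1 order-3 tensor
a, b, c = rng.normal(size=n), rng.normal(size=n), rng.normal(size=n)

full = np.einsum("i,j,k->ijk", a, b, c)     # dense form: n**3 entries
dense_count = full.size                      # 50 * 50 * 50 numbers
factored_count = a.size + b.size + c.size    # 50 + 50 + 50 numbers

# Any entry can be recovered from the factors without building the dense tensor
i, j, k = 3, 7, 11
entry_from_factors = a[i] * b[j] * c[k]

print(dense_count, factored_count)
```

Storing 150 numbers instead of 125,000 is the whole trick, just scaled up.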

They’re mathematical workhorses, honestly. Practical. Versatile. Indispensable in modern tech.

How Do Tensors Differ From Matrices?

Tensors trump matrices in dimensionality.

Matrices? Two dimensions only.

Tensors? As many as needed. Period.

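They’re basis-independent geometric objects. A matrix is only a grid of numbers; it can hold the components of a second-order tensor in one basis, but change the basis and you need a different grid for the same tensor.

Tensors come with operations like contraction and the tensor product. Ordinary matrix multiplication is a special case: a tensor product followed by a contraction.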
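The link between matrix multiplication and the tensor operations can be checked directly. The sketch below uses two small example matrices and NumPy’s `einsum`; it builds the order-4 tensor product first, then contracts the two inner indices, and compares the result with the usual `@` product.

```python
import numpy as np

A = np.array([[1.0, 2.0],
              [3.0, 4.0]])
B = np.array([[5.0, 6.0],
              [7.0, 8.0]])

product = np.einsum("ij,kl->ijkl", A, B)     # tensor product: order 2 + 2 = 4
contracted = np.einsum("ijjl->il", product)  # contract the two inner indices: order 4 -> 2
direct = np.einsum("ij,jk->ik", A, B)        # the same thing in one step

print(np.allclose(contracted, A @ B), np.allclose(direct, A @ B))
```

In practice nobody builds the order-4 intermediate; the one-step form is what libraries actually compute.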

They’re everywhere—machine learning, engineering, statistics.

Not just a math curiosity.

Real-world workhorses with serious computational muscle.

Can Tensors Be Visualized in 3D Space?

Yes, tensors can be visualized in 3D space, though it’s not easy. Methods include glyph-based representations, eigenvector visualization, and color direction maps.

These techniques help scientists see complex tensor data in fields like medical imaging and engineering. Superquadric glyphs are pretty handy – they show tensor orientation and magnitude simultaneously.
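Glyph and eigenvector methods both start from the same computation: an eigendecomposition of a symmetric second-order tensor at each point. Here is a minimal sketch of that step, assuming a single made-up symmetric 3×3 tensor; the drawing itself is left out.

```python
import numpy as np

# Hypothetical symmetric order-2 tensor (e.g. a stress or diffusion tensor at one point)
T = np.array([[4.0, 1.0, 0.0],
              [1.0, 3.0, 0.0],
              [0.0, 0.0, 1.0]])

# eigh is meant for symmetric matrices; eigenvalues come back in ascending order
eigenvalues, eigenvectors = np.linalg.eigh(T)

# Columns of `eigenvectors` give the glyph's axis directions,
# and the eigenvalues give the extent along each axis.
principal_direction = eigenvectors[:, -1]   # direction of the largest eigenvalue

print(eigenvalues)
print(principal_direction)
```

An ellipsoid or superquadric glyph stretched along those three directions shows orientation and magnitude in one shape.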

Tensor visualization isn’t just math nerd territory anymore; it’s essential for understanding stress in materials and brain connectivity.

What Programming Libraries Are Best for Tensor Operations?

For tensor operations, TensorFlow and PyTorch dominate the scene.

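They’re built specifically for handling complex tensor math in machine learning. NumPy works too: it has n-dimensional arrays and functions like einsum and tensordot, but no automatic differentiation or built-in GPU support.

JAX offers a NumPy-like API with automatic differentiation and just-in-time compilation through XLA, built around transforming plain Python functions rather than hand-built computational graphs.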

For other languages, Julia has TensorKit and TensorOperations, while C++ users turn to xtensor.

Your choice? Depends on whether you need deep learning support, performance, or user-friendly interfaces.

Are Neural Networks Related to Tensor Mathematics?

Neural networks and tensor mathematics are completely intertwined. No way around it.

Neural nets literally process data as tensors—those multi-dimensional arrays that handle complex information. The entire architecture depends on tensor operations: matrix multiplication, convolution, you name it.
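As a rough illustration, here is what one fully connected layer looks like when written as plain tensor operations in NumPy. The shapes, the random weights, and the choice of ReLU are all assumptions made for the example; real frameworks add automatic differentiation and hardware acceleration on top.

```python
import numpy as np

rng = np.random.default_rng(0)
# Hypothetical shapes: a batch of 8 inputs with 4 features each, mapped to 3 outputs
x = rng.normal(size=(8, 4))     # order-2 input tensor: (batch, features)
W = rng.normal(size=(4, 3))     # order-2 weight tensor: (features, outputs)
b = np.zeros(3)                 # order-1 bias tensor

z = x @ W + b                   # matrix multiplication plus broadcast addition
y = np.maximum(z, 0.0)          # ReLU, applied elementwise

# Image batches simply add more axes: (batch, height, width, channels)
images = np.zeros((8, 32, 32, 3))

print(y.shape, images.ndim)
```

Stack a few of these layers and you have a network; everything flowing through it is an array with some number of axes.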

Backpropagation? Tensor-based. Training algorithms? More tensors.

This relationship explains why frameworks like TensorFlow emerged—they’re built specifically to handle these mathematical structures efficiently.

No tensors, no modern AI. Simple as that.